Recently, a team led by Zhang Yi developed Mamba Data Worker (MDW), an efficient data flow management software framework, building on the Beijing Synchrotron Radiation Facility (BSRF). MDW is an important component of Mamba, the experimental control and data acquisition software. The related paper, titled "A high throughput big data orchestration and processing system for the High Energy Photon Source", has been accepted by the Journal of Synchrotron Radiation and will be published in November this year. The work was supported by the National Natural Science Foundation of China and the Science and Technology Innovation Program of the Institute of High Energy Physics, Chinese Academy of Sciences. The first author is doctoral student Li Xiang, and the corresponding authors are Zhang Chenglong and Zhang Yi.

New-generation high-energy synchrotron radiation experiments are characterized by high throughput, in situ measurement, dynamic loading, multimodality, and fast feedback. Sample scanning has shifted from one-dimensional step scanning to multidimensional continuous scanning. Multidimensional fly scanning is expected to become widespread; it involves a large number of experimental devices and significantly increases data throughput. The demand for synchronized data acquisition and processing has grown, and the need for fast feedback control driven by real-time data analysis is increasingly urgent. The low emittance and high coherence of the High Energy Photon Source (HEPS) make small-spot experiments more common, sharply increasing the number of data points, often with several experimental modes operating in concert. Requirements for stability, automation, and intelligence in sample loading, sample selection, and focusing have risen significantly. To meet these needs, the experimental data path must change from a traditional single channel to multiple channels, and from simple, file-based manual control to online, automated, intelligent acquisition based on data streams. Efficient management of high-throughput, multimodal data streams has become key to improving experimental efficiency and to automated, intelligent workflows across the full data lifecycle of acquisition, analysis, and information mining.

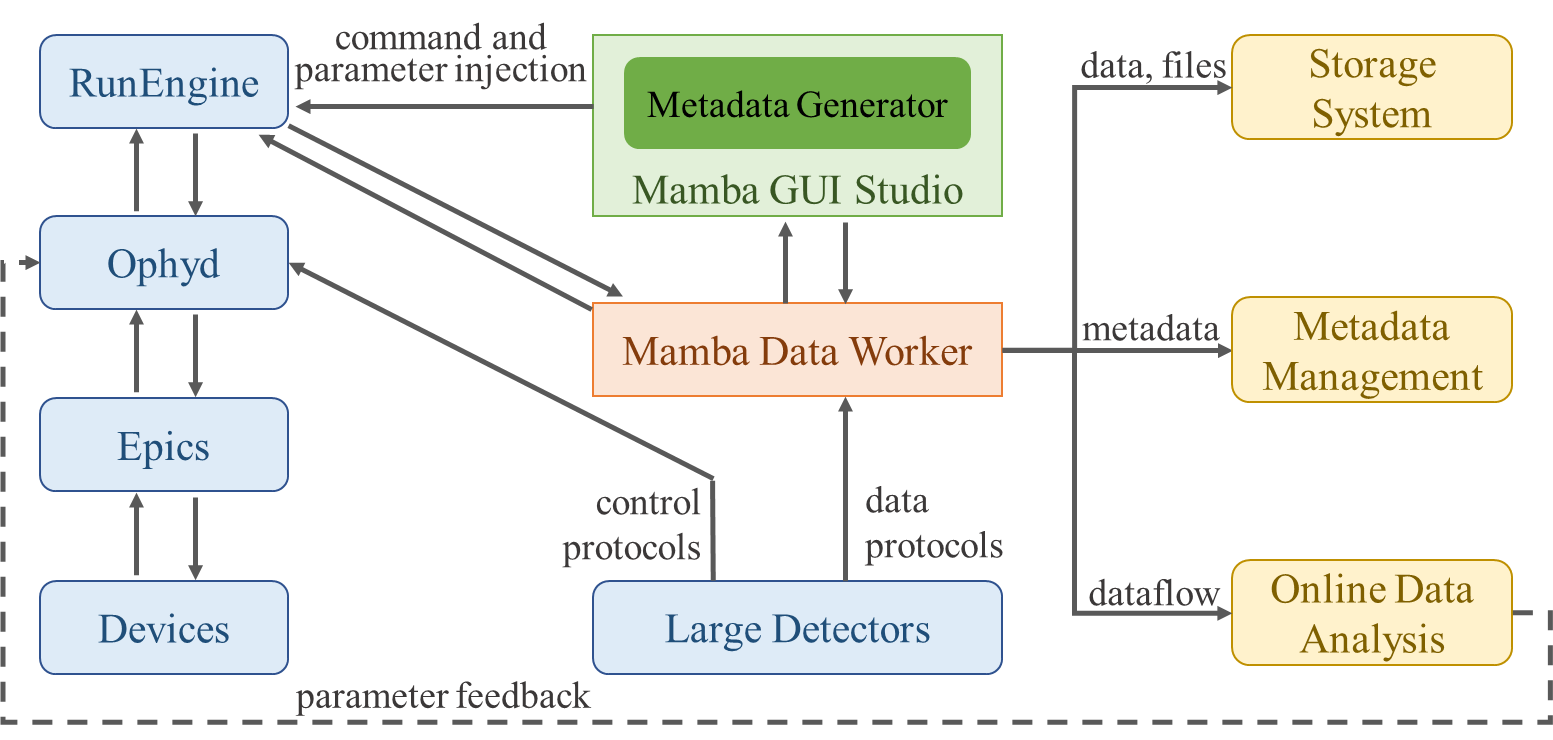

The goal of MDW is to provide dynamic creation and scheduling of data flow pipelines, so that the transmission relationships between multiple endpoints can be adjusted and reallocated flexibly at runtime, forming a more efficient and flexible topological network of data pipelines. A visual workflow engine based on Orange serves as the MDW front end, making it easier to build experimental data distribution and scheduling paths graphically for different beamline stations. Higher scanning dimensions, more complex scanning mechanisms, and more data modalities have led to diverse data sources and types and to uneven data flow during acquisition, which poses significant challenges for data management, alignment, assembly, and writing. To better maintain and manage metadata and data, MDW will unify the metadata and data of all devices into the HDF5 format, which offers clearly organized entries, flexibility, generality, cross-platform support, and scalability. This will progressively bring it in line with national trends in scientific data management.
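The idea of rerouting data paths between endpoints at runtime can be pictured with a small in-process sketch. It is not MDW's implementation or API: the `Router` class, its method names, and the endpoint name `"detector"` are all hypothetical, and a real system would move data between processes or machines rather than between Python callables.

```python
from collections import defaultdict


class Router:
    """Toy router (hypothetical): routes from sources to sinks can change at runtime."""

    def __init__(self):
        # source name -> list of sink callables currently attached to it
        self._routes = defaultdict(list)

    def connect(self, source, sink):
        """Attach a sink to a source; connecting twice has no extra effect."""
        if sink not in self._routes[source]:
            self._routes[source].append(sink)

    def disconnect(self, source, sink):
        """Detach a sink from a source if it is attached."""
        if sink in self._routes[source]:
            self._routes[source].remove(sink)

    def publish(self, source, frame):
        """Deliver one frame to every sink attached to the source right now."""
        # Iterate over a copy so a sink may rewire routes while handling a frame.
        for sink in list(self._routes[source]):
            sink(frame)


router = Router()
written, displayed = [], []
to_writer = written.append      # stands in for a file-writing endpoint
to_display = displayed.append   # stands in for a live-display endpoint

router.connect("detector", to_writer)
router.publish("detector", {"index": 0})   # only the writer is attached

router.connect("detector", to_display)     # add a second path while running
router.publish("detector", {"index": 1})   # both endpoints receive this frame

router.disconnect("detector", to_writer)   # drop the first path while running
router.publish("detector", {"index": 2})   # only the display receives this frame
```

In this sketch the route table is the only mutable state, so changing the topology never requires restarting the source. That is the property the paragraph above describes; how MDW achieves it in practice is not specified here.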

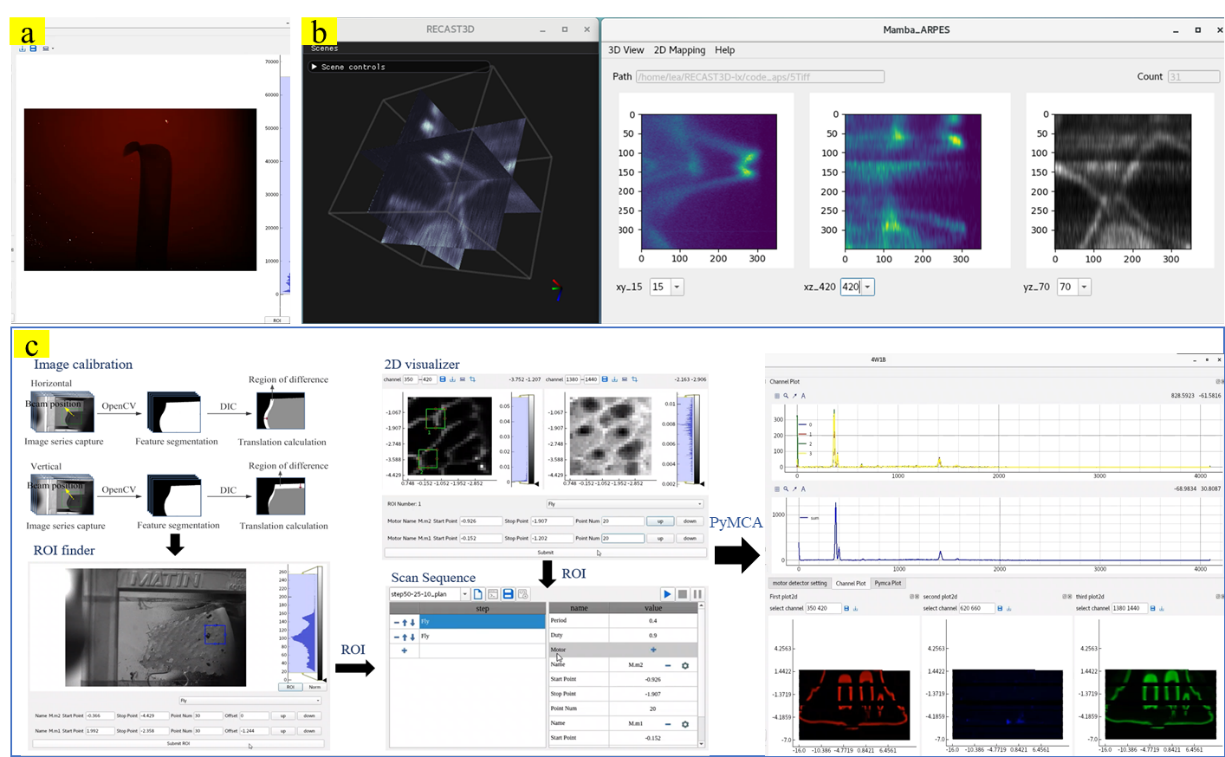

At present, MDW has served multiple rounds of dedicated beamtime at BSRF. Although the current experimental data throughput is not very high, MDW has shown strong versatility and flexibility, supporting experiments with different beamlines, methods, measurement devices, and scanning mechanisms. For example, in a CT imaging experiment at the 3W1 beamline station, MDW successfully integrated a self-developed 6k × 6k large-area detector. Once the user started acquisition, each image was displayed in real time and assembled into HDF5 in real time, and the data could be fed directly into third-party data processing software for real-time reconstruction. In a fluorescence mapping (XRF mapping) experiment at the 4W1B beamline station, MDW was successfully interfaced with PandABox and Xspress3, the specialized hardware required for high-frequency communication in fly scans, and streamed data in real time directly into PyMca and other lightweight data processing algorithms such as spectral calibration and peak fitting. This helps users quickly judge whether the experiment is proceeding correctly and select regions of interest (ROI) for subsequent acquisition. The data stream is collected, assembled, transmitted, visualized, and written in real time, greatly improving experimental efficiency. Roland Müller, head of the control group at the BESSY light source in Germany and recipient of the Lifetime Achievement Award of the International Conference on Accelerator and Large Experimental Physics Control Systems (ICALEPCS), wrote in an email that the Mamba project is leading the development of experimental control and data acquisition software for fourth-generation light sources in areas such as scanning and rapid data acquisition.
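The alignment and assembly step mentioned for fly scans, where records from different devices arrive at uneven rates and must be matched before writing, can be sketched as follows. This is an illustration under stated assumptions, not the method used in MDW: the `FrameAssembler` class, the source names `"position"` and `"spectrum"`, and the use of a shared integer frame index as the matching key are all hypothetical.

```python
class FrameAssembler:
    """Toy assembler (hypothetical): emits a frame once every source has reported it."""

    def __init__(self, sources):
        self._sources = frozenset(sources)
        # frame index -> {source name: payload} for frames still incomplete
        self._pending = {}

    def add(self, source, index, payload):
        """Store one record; return (index, parts) when the frame is complete, else None."""
        if source not in self._sources:
            raise ValueError(f"unknown source: {source}")
        parts = self._pending.setdefault(index, {})
        parts[source] = payload
        if self._sources.issubset(parts):
            # All sources have reported this index: hand the frame over and forget it.
            return index, self._pending.pop(index)
        return None


assembler = FrameAssembler(["position", "spectrum"])

# Records arrive out of step: positions run ahead, spectra arrive in a different order.
results = [
    assembler.add("position", 0, (0.0, 0.0)),
    assembler.add("position", 1, (0.1, 0.0)),
    assembler.add("spectrum", 1, [3, 7, 2]),
    assembler.add("spectrum", 0, [1, 4, 9]),
]
complete = [r for r in results if r is not None]
```

Each completed frame could then be passed on to a writer or an analysis step as one unit. A production system would also need timeouts and limits for frames that never complete, which this sketch leaves out.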